Training data

ToothFairyAI allows you to manage the datasets needed to train your AI models. This document provides an overview of the training data creation and import process.

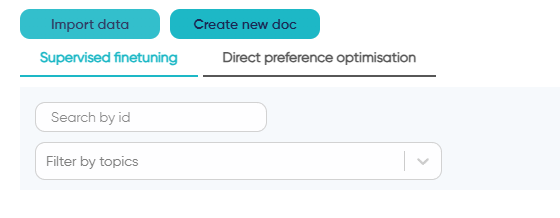

Menu location

Training data can be accessed from the following menu:

Training data > Create new doc

The fine-tuning section consists of the following tabs:

- Supervised finetuning: Stores agent-specific datasets for supervised finetuning.

- Direct preference optimisation: Stores agent-specific datasets for direct preference optimisation.

Creation of training data

Creating training data ultimately comes down to creating a Live document with a specific structure.

As with Knowledge Hub live documents, users can collaborate in real time to create the training data needed to fine-tune the AI models.

The key advantage of this approach is that the training data blends regular document content with embedded structured snippets that are aware of the content surrounding them.

The behaviour of the Live document is very similar to the Knowledge Hub live documents, with the following differences:

General fields

- Topics: Topics are used to filter the training data. The user can select the topics that are relevant to the training data.

- Validation: Sets whether the document's training data is used to fine-tune the AI model or solely for validation purposes.

- Add snippet: For Conversational AI, this shows the ADD SYS and ADD TURN buttons.

The ADD SYS button lets the user inject a system message as the initial block of the document, right under its title. The system block is optional; however, we recommend including it if the model you want to produce through finetuning requires strong agentic behaviour.

The ADD TURN button allows the user to add a new training snippet. More details below.

Supervised training snippets

The conversational AI snippet consists of the following fields:

- Role: The role of the message in the conversation. The user can select from the following roles:

  - System: Injects a system message into the conversation

  - User: Injects a user message into the conversation

  - Assistant: Injects an assistant message into the conversation

- Message: The content of the message. We strongly recommend strictly alternating between user and assistant messages and ending the conversation with an assistant message.
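As a minimal sketch, the recommendation above can be checked offline on a conversation before you turn it into snippets or files. It assumes each message is a Python dict with `role` and `content` keys (mirroring the import format described later) and, as a simplifying assumption, ignores system messages when checking alternation. `alternation_issues` is a hypothetical helper, not part of ToothFairyAI.

```python
def alternation_issues(messages):
    """Return a list of problems; an empty list means the conversation follows the recommendation.

    Each message is a dict like {"role": "user", "content": "..."}.
    System messages are skipped (assumption: they only set context).
    """
    issues = []
    turns = [m for m in messages if m["role"] != "system"]
    # Flag every pair of neighbouring turns that share the same role.
    for prev, cur in zip(turns, turns[1:]):
        if prev["role"] == cur["role"]:
            issues.append(f"two consecutive '{cur['role']}' messages")
    # The conversation should finish with an assistant message.
    if not turns or turns[-1]["role"] != "assistant":
        issues.append("conversation does not end with an assistant message")
    return issues
```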

Direct preference optimisation snippets

Direct preference optimisation snippets are identical to the supervised training snippets described above, with the addition of a dedicated DPO snippet.

The DPO snippet is used to specify the preferred and non-preferred assistant messages at the end of the conversation.

The role of the DPO snippet defaults to Assistant, and both the preferred and non-preferred assistant messages must be specified before the DPO dataset can be created.

Incomplete conversations are rejected during the DPO training data creation step.
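The completeness rule above can be mirrored in a small local check before you submit DPO examples. This is a sketch under stated assumptions: `dpo_snippet_problems` is a hypothetical helper, and treating a conversation without any user message as "incomplete" is our own assumption, since the exact criteria the platform applies are not documented here.

```python
def dpo_snippet_problems(messages, preferred, non_preferred):
    """Return reasons a DPO example would likely be rejected (empty list = complete).

    `messages` is the conversation before the DPO snippet, as
    {"role", "content"} dicts; `preferred` and `non_preferred` are the two
    assistant replies entered in the DPO snippet.
    """
    problems = []
    # Assumption: a conversation with no user turn counts as incomplete.
    if not any(m["role"] == "user" for m in messages):
        problems.append("conversation has no user message")
    # Both assistant messages must be specified (per the rule above).
    if not preferred.strip():
        problems.append("preferred assistant message is missing")
    if not non_preferred.strip():
        problems.append("non-preferred assistant message is missing")
    return problems
```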

Training data generation

Editor content outside the snippets is not included in the chat training data; however, it can provide additional context for anyone reviewing that data.

To delete a snippet, click the Delete icon in its top right-hand corner.

ToothFairyAI enforces alternation between the user and assistant roles when the training data is created: if two consecutive snippets share the same role, a semi-empty message with the missing role is inserted between them. We strongly recommend avoiding these edge cases, as they can significantly degrade the quality of the dataset.
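To illustrate why consecutive same-role snippets hurt dataset quality, the sketch below mimics the behaviour described above: whenever two user or assistant messages with the same role follow each other, it inserts a filler turn with the missing role. The function name and the empty placeholder content are assumptions; the exact text ToothFairyAI inserts is not documented here.

```python
def enforce_alternation(messages, placeholder=""):
    """Insert a filler turn between consecutive user/assistant messages sharing a role.

    `placeholder` is a stand-in for the platform's semi-empty message content,
    which is not documented; it is an assumption of this sketch.
    """
    fixed = []
    for msg in messages:
        prev = fixed[-1] if fixed else None
        if prev and prev["role"] == msg["role"] and msg["role"] in ("user", "assistant"):
            # Add the role that is missing between the two same-role messages.
            other = "assistant" if msg["role"] == "user" else "user"
            fixed.append({"role": other, "content": placeholder})
        fixed.append(msg)
    return fixed
```

The filler turn carries no useful content, so the model is trained on an exchange that never really happened; fixing the snippets themselves avoids this.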

Import of training data

ToothFairyAI allows you to import data as JSONL files that natively follow the structure required by the AI models; imported data is combined with the data created in the platform through Live documents.

File size limits per subscription:

- Starter: Maximum 2MB per file

- Pro: Maximum 5MB per file

- Business: Maximum 20MB per file

- Enterprise: Maximum 20MB per file
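If you prepare files with a script, a quick size check against these limits can prevent failed uploads. This is a minimal sketch: the lowercase plan keys and the decimal interpretation of MB are assumptions, and `within_limit` / `fits_plan` are hypothetical helpers.

```python
import os

# Per-file limits from the list above. Assumption: 1 MB = 1,000,000 bytes.
# If the platform actually counts 1,048,576 bytes per MB, this check is
# slightly stricter than necessary, never looser.
MAX_FILE_BYTES = {
    "starter": 2_000_000,
    "pro": 5_000_000,
    "business": 20_000_000,
    "enterprise": 20_000_000,
}


def within_limit(size_bytes: int, plan: str) -> bool:
    """True if a file of `size_bytes` bytes fits the per-file limit for `plan`."""
    return size_bytes <= MAX_FILE_BYTES[plan.lower()]


def fits_plan(path: str, plan: str) -> bool:
    """Check a file on disk against the limit for `plan` (e.g. "Starter", "Pro")."""
    return within_limit(os.path.getsize(path), plan)
```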

Supervised finetuning training data

Training data is imported by uploading a JSONL file. ToothFairyAI does not validate the content of the file, so make sure the JSONL file is correctly formatted.

Each line of the JSONL file must contain exactly one JSON object with the following structure (pretty-printed here for readability; in the file, each object must occupy a single line):

```json
{
  "messages": [
    {
      "role": "system",
      "content": "System message"
    },
    {
      "role": "user",
      "content": "User message"
    },
    {
      "role": "assistant",
      "content": "Assistant message"
    },
    ...
  ]
}
```
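Because each JSON object must sit on a single line, it is easiest to generate the file programmatically rather than by hand. The sketch below uses only Python's standard `json` module; `write_sft_jsonl` and the in-memory `conversations` layout (a list of message lists) are hypothetical.

```python
import json


def write_sft_jsonl(conversations, path):
    """Write one {"messages": [...]} object per line.

    `conversations` is a list of message lists; each message is a dict with
    "role" ("system", "user" or "assistant") and "content".
    """
    with open(path, "w", encoding="utf-8") as f:
        for messages in conversations:
            # json.dumps without `indent` keeps the record on a single line;
            # line breaks inside message text are escaped as \n.
            f.write(json.dumps({"messages": messages}, ensure_ascii=False) + "\n")
```

Serialising with `json.dumps` and no `indent` argument keeps one record per line even when a message contains line breaks, since those are escaped inside the JSON string.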

Direct preference optimisation training data

Training data is imported by uploading a JSONL file. ToothFairyAI does not validate the content of the file, so make sure the JSONL file is correctly formatted.

Each line of the JSONL file must contain exactly one JSON object with the following structure (pretty-printed here for readability; in the file, each object must occupy a single line):

```json
{
  "input": {
    "messages": [
      {
        "role": "system",
        "content": "System message"
      },
      {
        "role": "user",
        "content": "User message"
      },
      {
        "role": "assistant",
        "content": "Assistant message"
      },
      ...
    ]
  },
  "preferred_output": [
    {
      "role": "assistant",
      "content": "Assistant message"
    }
  ],
  "non_preferred_output": [
    {
      "role": "assistant",
      "content": "Assistant message (non preferred)"
    }
  ]
}
```
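A similar sketch for the DPO format wraps the conversation context under `input` and places the two candidate replies in single-element `preferred_output` and `non_preferred_output` lists, matching the structure above. `dpo_record` and `write_dpo_jsonl` are hypothetical helpers built on Python's standard `json` module.

```python
import json


def dpo_record(messages, preferred, non_preferred):
    """Build one DPO import record.

    `messages` is the conversation context (list of {"role", "content"} dicts);
    `preferred` and `non_preferred` are the two candidate assistant replies.
    """
    return {
        "input": {"messages": messages},
        "preferred_output": [{"role": "assistant", "content": preferred}],
        "non_preferred_output": [{"role": "assistant", "content": non_preferred}],
    }


def write_dpo_jsonl(examples, path):
    """Write (messages, preferred, non_preferred) tuples as one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for messages, preferred, non_preferred in examples:
            record = dpo_record(messages, preferred, non_preferred)
            # No `indent`, so each record is serialised on a single line.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
```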

ToothFairyAI performs a shallow validation of the data structure when the JSONL file is uploaded; however, if errors surface later, the platform cannot provide a detailed report of the dataset issue during the finetuning process. In most cases, the error details are visible on any fine-tuning job whose status is InError.
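Since upload-time validation is shallow and detailed errors may only appear later on a job in status InError, it can pay to run a local pre-check before uploading. The sketch below is a stand-in under our own assumptions, not ToothFairyAI's validator: `shallow_check` is hypothetical, and it reports, by line number, lines that are empty, are not valid JSON, lack the top-level keys of either format, or contain messages without a valid `role` and string `content`.

```python
import json

ROLES = {"system", "user", "assistant"}


def _messages_problem(messages):
    """Return a problem description for a message list, or None if it looks fine."""
    if not isinstance(messages, list) or not messages:
        return "expected a non-empty list of messages"
    for m in messages:
        if not isinstance(m, dict) or m.get("role") not in ROLES or not isinstance(m.get("content"), str):
            return f"malformed message: {m!r}"
    return None


def shallow_check(path):
    """Return (line_number, problem) pairs for a supervised or DPO JSONL file."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                problems.append((lineno, "empty line"))
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((lineno, f"invalid JSON: {exc.msg}"))
                continue
            if not isinstance(record, dict):
                problems.append((lineno, "not a JSON object"))
                continue
            if "messages" in record:  # supervised finetuning format
                lists = [record["messages"]]
            elif "input" in record:  # direct preference optimisation format
                inp = record["input"]
                lists = [
                    inp.get("messages") if isinstance(inp, dict) else None,
                    record.get("preferred_output"),
                    record.get("non_preferred_output"),
                ]
            else:
                problems.append((lineno, "missing 'messages' or 'input' key"))
                continue
            # Report only the first malformed message list on each line.
            for messages in lists:
                issue = _messages_problem(messages)
                if issue:
                    problems.append((lineno, issue))
                    break
    return problems
```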